Program tuning as a resource allocation problem

As the saying goes, every problem in CS can be solved with another level of indirection (except the problem of too much indirection). This often leads to caching to improve performance: remember some results to handle them faster. Caches are everywhere in computing these days: from the CPU itself (memory caches, TLBs, instruction caches, branch prediction), to databases (query caches, table caches), networking stacks (neighbor caches, routing caches, DNS caches) and IO (directory caches, OS page buffering). When I say cache here I mean this generalized cache concept, not just the caches of the CPUs.

Caches usually improve performance, but only if your cache hit rates are high enough (and the cache latency low enough, but that’s a different discussion). So if you thrash the caches and the hit rate becomes very low, performance suffers. This is a problem that theoretical CS algorithm analysis largely ignored for a long time, but there has been some work on this recently (like cache oblivious algorithms).

However this is only for the CPU caches, not for all the other caches that exist in a modern programming environment.

A cache is a shared resource in a program. Typically programs consist of many subsystems and libraries. They all impact various caches. They are likely written by different developers. Calling a library means whoever wrote that library shares cache resources with you.

Now when tuning some sub function you often have a trade off between simplicity and performance. A common technique to improve performance is to replace computation with table lookups (despite the memory wall it is often still the fastest way). This will (usually) improve performance, but increase the cache foot print if the input values vary enough to cover larger parts of the table. Or you could add a cache, which is essentially an automatic table lookup. This will make the function faster, but also increase its resource foot print in the shared cache resources.
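To make the trade off concrete, here is a minimal sketch of the same function written both ways. Bit counting is only an example I picked for illustration, and the names `popcount_table` and `popcount_compute` are hypothetical. The table variant needs fewer operations but adds 256 bytes of data to the footprint; the computed variant touches no data at all.

```c
#include <stdint.h>

/* 256-byte lookup table: bit count of every possible byte value.
   Filled lazily on first use so the sketch stays self-contained. */
static uint8_t bits_in_byte[256];
static int table_ready;

static void init_table(void)
{
    for (int i = 0; i < 256; i++) {
        int n = 0;
        for (int v = i; v != 0; v >>= 1)
            n += v & 1;
        bits_in_byte[i] = (uint8_t)n;
    }
    table_ready = 1;
}

/* Table variant: four loads. Which table cache lines get touched
   depends on the input bytes (4 lines in total, assuming 64-byte lines). */
unsigned popcount_table(uint32_t x)
{
    if (!table_ready)
        init_table();
    return bits_in_byte[x & 0xff] + bits_in_byte[(x >> 8) & 0xff] +
           bits_in_byte[(x >> 16) & 0xff] + bits_in_byte[x >> 24];
}

/* Computed variant: no data footprint, only ALU work. */
unsigned popcount_compute(uint32_t x)
{
    x = x - ((x >> 1) & 0x55555555u);
    x = (x & 0x33333333u) + ((x >> 2) & 0x33333333u);
    x = (x + (x >> 4)) & 0x0f0f0f0fu;
    return (x * 0x01010101u) >> 24;
}
```

Which one wins depends on how hot the function is and on how much of the data cache the rest of the program already needs.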

As a side note, anything with a table lookup is much harder to tune in a micro benchmark. If the foot print of a function is data independent we can just call the function in a loop with the same input and measure the total time, divided by the number of iterations. But if the foot print is data dependent we always need a representative input set, otherwise the unrealistic cache hit rate on the table skews the results badly.

Even if no table lookup is used, more complex logic will likely have more overhead in the instruction caches and branch prediction caches. So common optimizations often increase the foot print. But when the total program already has a large foot print, increasing the total further may cause other time critical subsystems to start thrashing their working set.

Similar reasoning applies to other kinds of caches: a database sub function may thrash the database engine’s query or table cache. A network subsystem may thrash the DNS cache. A 3D sub function may thrash the 3D stack’s JIT code cache.

So you could say that the foot print of a function should not be higher than the proportion of runtime it executes. If there are free resources it may be reasonable to take more than that, but even then it may be better to be frugal, because the increased resource usage will not necessarily pay off in better performance. Using fewer resources may also save power or leave more resources for other programs sharing the same system (for power that’s not always true; having better performance may help you in the race to idle).

So we may end up with an interesting trade off when tuning a function. We have the choice between different algorithms with different resource footprints (e.g. table lookup vs computation). But the choice which one to use depends on the rest of the program: whether the function is time critical enough and how much resources are used elsewhere.

So this is a nasty problem. Instead of being able to tune each function individually we actually have a global optimization problem over the whole program. The problem of resource allocation is non decomposable. That is when you change one small piece in a big program it may affect everyone else. When the program changes and increases resource usage somewhere a lot of resource allocation may need to be redone to re-balance, which may include changing algorithms of some old subsystem.

This is especially a problem for library functions, where the library author doesn’t really know what the calling program does. And on the other hand the library user didn’t write the library and doesn’t know what the library author optimized for.

One way would be to offer multiple variants optimized for different resource consumptions, so that the user can choose. This would be similar to how the algorithms in the C++ STL are tagged with their algorithmic complexity for different operations. One could imagine a library of algorithms that are tagged with their resource overhead. These tags would likely need to be equations based on the input set size. Also, since there are multiple resources (e.g. memory hierarchy, branch predictor, other software caches) it may need multiple indicators.
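As a rough sketch of what such tagging could look like: everything here (the `struct variant` descriptor, its fields and `pick_variant`) is hypothetical and not an existing library interface. Each variant declares a data footprint and a relative cost, and the caller picks the fastest one that fits the budget left by the rest of the program. A real version would need per-resource indicators and footprints that depend on the input size.

```c
#include <stddef.h>

/* Hypothetical descriptor for one implementation of the same function. */
struct variant {
    const char *name;
    size_t      table_bytes;   /* data footprint claimed by the author */
    double      rel_cost;      /* relative time per call, lower is faster */
};

/* Return the fastest variant whose footprint fits the budget,
   or NULL if none fits. */
const struct variant *pick_variant(const struct variant *v, size_t n,
                                   size_t budget_bytes)
{
    const struct variant *best = NULL;

    for (size_t i = 0; i < n; i++) {
        if (v[i].table_bytes > budget_bytes)
            continue;               /* too expensive for this program */
        if (best == NULL || v[i].rel_cost < best->rel_cost)
            best = &v[i];
    }
    return best;
}
```

The hard part is of course not the selection, but getting trustworthy numbers into the descriptors.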

Modern caches are generally so complex that they are nearly impossible to analyze “on paper” (especially when you have multiple levels interacting), and need measurements instead.

So developing metrics for this would be interesting. Since the costs are paid elsewhere (you cause a cache miss somewhere else) it is hard to associate the two by classic profiling. We have no way to directly monitor the various caches. For software caches this could be improved by adding better tracing capabilities, but for hardware it’s harder.

One way would be subtractive monitoring, similar to cyclesoak. You run a “cachesoak” in parallel to the program. It touches memory and uses time measurements to estimate how much of its working set has been displaced by the other program running on the same core.

This technique has been used for attacking cryptographic algorithms, by exploiting the cache access patterns of table lookups in common ciphers, and with OS paging for password attacks, starting in the 1970s.
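The core of such a soak probe could look roughly like the sketch below. The function name `soak_pass_ns` and the use of `clock_gettime` are my assumptions for illustration; a real tool would need to pin itself to the right core, size the buffer to the cache level of interest and deal with hardware prefetchers.

```c
#ifndef _POSIX_C_SOURCE
#define _POSIX_C_SOURCE 199309L   /* for clock_gettime */
#endif
#include <stddef.h>
#include <stdint.h>
#include <time.h>

/* Touch one byte per cache line of buf and return the elapsed time in
   nanoseconds. line is the assumed cache line size (e.g. 64) and must
   not be 0. */
int64_t soak_pass_ns(volatile unsigned char *buf, size_t size, size_t line)
{
    struct timespec t0, t1;
    unsigned sum = 0;

    clock_gettime(CLOCK_MONOTONIC, &t0);
    for (size_t i = 0; i < size; i += line)
        sum += buf[i];            /* volatile read, cannot be optimized away */
    clock_gettime(CLOCK_MONOTONIC, &t1);
    (void)sum;

    return (int64_t)(t1.tv_sec - t0.tv_sec) * 1000000000LL
         + (t1.tv_nsec - t0.tv_nsec);
}
```

The idea is to call it once to pull the buffer into the cache, let the code under test run, and then call it again: the slower the second pass, the more of the soak buffer was displaced.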

One could also use this subtractive technique for other software caches. For example for a database access run a background thread that accesses the database in a controlled way and measures access times. The challenge of course would be to understand the caching behavior of the database well enough to get useful data.

This all still doesn’t tell you where the resource consumption happens. So to measure the resource consumption of each subsystem in a complex program you would need to run each of them individually against a cachesoak test, ideally with a representative input data set, otherwise you may get unrealistic access patterns.

I mostly talked about memory hierarchy caches here, but of course this applies to other caches too. An interesting case is branch prediction caches in CPUs. A mispredicted branch can take nearly an order of magnitude more time than a predicted branch. Indirect branches need more resources than conditional branches (full address versus single bit). I wrote about optimizing conditional branches earlier. So a less complex algorithm may be slower, but use fewer resources.

It would be interesting to develop a metric for the branch prediction resource consumption. One way may be to use a variant of cyclomatic complexity, but focus on the hot paths of the program only using profile feedback.

So overall allocating cache resources to different sub systems is hard. It would be good if we had better tools for this.

Efficient n-states on x86 systems

Programs often have flags that affect the control flow. The simplest case is a boolean that can be either TRUE or FALSE (normally 1 and 0). The x86 ISA uses condition codes to test and do conditional jumps. It has a direct flag to test for zero, so that a test for zero and non zero can be efficiently implemented.

if (flag == 0) case_1();

else case_2();

In assembler this will look something like this (don’t worry if you don’t know assembler, just read the comments):

testl $-1,flag ; and flag with -1 and set condition codes

jz is_zero ; jump to is_zero if flag was zero

; flag was non zero here

But what happens when flag can have more than two states (like the classic true, false, file_not_found)? How can we implement such a test in the minimum number of instructions? A common way is to use a negative value (-1) for the third state. x86 has a condition code for negative, so the code becomes simply

if (flag == 0) case_1();

else if (flag < 0) case_2();

else /* > 0 */ case_3();

(if you try that in gcc use a call, not just a value for the differing control flows, otherwise the compiler may use tricks to implement this without jumps)

In assembler this looks like

testl $-1,flag ; and flag with -1 and set condition codes

jz case_1 ; jump if flag == 0

js case_2 ; jump if flag < 0

; flag > 0 here

Now how do we handle four states with a single test and a minimum number of jumps? Looking at the x86 condition tables it has more condition codes. One interesting but obscure one is the parity flag. Parity is true if the number of bits set is even. In x86 it also only applies to the lowest (least-significant) byte of the value.

Parity used to be used for 7bit ASCII characters to detect errors, by adding a parity bit in the 8th bit that makes the parity always even (or odd, I forgot). These days this is mostly obsolete and usually replaced with better codes that can not only detect, but also correct errors.

Back to the n-value problem. So we can use an additional positive value that has an even parity, that is an even number of bits, like 3 (0b11). The total values now are: -1, 0, 1, 3

In assembler this is a straightforward additional test for “p”:

testl $-1,flag ; and flag with -1 and set condition codes

jz case_1 ; jump if flag == 0

js case_2 ; jump if flag < 0

jp case_3 ; jump if flag > 0 and flag has even parity

; flag > 0 and has odd parity

Note that the 3 would be only detected in the lowest byte, so all 32bit values with 3 in the lowest 8bit would be equivalent to 3. That is also true for all negative values which are the same as -1. Just 0 is lonely.

Now how to implement this in C if you don’t want to write assembler (and are not writing a compiler or JIT)? gcc has a __builtin_parity function, but unfortunately it is defined as parity on 4 bytes, not a single byte. This means it cannot map directly to the “p” condition code.
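For reference, the classification that the test/jz/js/jp sequence performs can be modelled in plain C by masking to the low byte before calling __builtin_parity. This is only a sketch of the semantics: the names `enum nstate` and `classify` are made up for illustration, and nothing guarantees that the compiler turns it into a single test followed by three jumps.

```c
enum nstate { ST_ZERO, ST_NEG, ST_EVEN, ST_ODD };

/* Model of the test/jz/js/jp sequence in C (gcc/clang builtin). */
enum nstate classify(int flag)
{
    if (flag == 0)
        return ST_ZERO;                 /* jz */
    if (flag < 0)
        return ST_NEG;                  /* js */
    /* __builtin_parity returns 1 for an odd number of set bits.
       Mask to the low byte because that is all the x86 parity flag sees. */
    if (__builtin_parity((unsigned)flag & 0xffu) == 0)
        return ST_EVEN;                 /* jp */
    return ST_ODD;                      /* fall through */
}
```

With this model `classify(3)`, `classify(0x103)` and `classify(0x100)` all land in the even parity case, which matches the note above that only the lowest byte counts.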

One way is to use the asm goto extension that is available in gcc 4.5 and use inline assembler. With asm goto an inline assembler statement can change control flow and jump directly to C labels.

asm goto(

" testl $-1,%0\n"

" jz %l1\n"

" js %l2\n"

" jp %l3" :: "rm" (flag) :: lzero, lneg, lpar);

/* flag > 0, odd parity here (1) */

lzero: /* flag == 0 here (0) */

lneg: /* flag < 0 here (-1) */

lpar: /* flag > 0, even parity here (3) */

This works and could be packaged into a more generic macro. The disadvantage of course is — apart from being non portable — that the compiler cannot look into the inline assembler code. If flag happens to be a constant known to the compiler it won’t be able to replace the test with a direct jump.
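Packaged as a function, the fragment above could look like the following sketch. The wrapper name `classify_flag_asm` and the enum are hypothetical. I use an "r" constraint instead of "rm" to keep it simple, and a plain C fallback for non-x86 targets so the code still builds there.

```c
enum flag_class { FC_ZERO, FC_NEG, FC_EVEN, FC_ODD };

enum flag_class classify_flag_asm(int flag)
{
#if defined(__GNUC__) && (defined(__x86_64__) || defined(__i386__))
    __asm__ goto("testl $-1,%0\n\t"
                 "jz %l1\n\t"
                 "js %l2\n\t"
                 "jp %l3"
                 : /* no outputs */
                 : "r" (flag)
                 : "cc"
                 : lzero, lneg, lpar);
    return FC_ODD;      /* fell through: flag > 0, odd parity (1) */
lzero:
    return FC_ZERO;     /* flag == 0 */
lneg:
    return FC_NEG;      /* flag < 0 (-1) */
lpar:
    return FC_EVEN;     /* flag > 0, even parity in the low byte (3) */
#else
    /* Fallback for other targets: same classification in plain C. */
    if (flag == 0) return FC_ZERO;
    if (flag < 0)  return FC_NEG;
    return __builtin_parity((unsigned)flag & 0xffu) ? FC_ODD : FC_EVEN;
#endif
}
```

In real code you would jump directly to the four code paths instead of returning an enum, otherwise the caller has to branch again on the result.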

Can this be extended to more than four states? We could add a fifth state by using two negative numbers that have even and odd parity respectively. This would require the negative cases to go through two conditional jumps however: first to check if the value is negative and then another to distinguish the two parity cases.

It’s not clear that this is a good idea. Some older x86 micro architectures have limits on the number of jumps in a 16byte region that can be branch predicted (that is why gcc sometimes adds weird nops for -mtune=generic). Losing branch prediction would cost us far more than we could gain with any optimization here.

Another approach would be to use a cmp (subtraction) instead of a test. In this case the overflow and carry condition codes would be also set. But it’s not clear if that would gain us any more possibilities.

Update 12/26: Fix typo

A calculator for PCMPSTR

String processing is quite common in software. It ranges from simple operations like searching for characters in a string, through more complex subset and sub string searches, to complex transformations. Most string processing code processes strings byte by byte (or 16bit word by word for 16bit unicode). That is fine for code that is not time critical.

A modern CPU can process 32 to 256 bits at a time inside a core. If it handles only 8 bits in each operation, large parts of the computing capacity are unused. If the string code processes a lot of data and slows down the program it’s better to find some way to use this unused computing capability. I’m only talking about parallelism inside a single core here (data level parallelism), not about using multiple cores.

For simple standard algorithms like strlen there are algorithms available that process the string in 32bit or 64bit units using various bit manipulation tricks (Hacker’s Delight has all the details). Typically C libraries already use optimized algorithms (although the compiler may not always use the standard library if you just call strlen). But they are usually too difficult to use for custom string processing.

They also usually need special cases to handle the end of the string (the length of a string is often not known in advance) and are complicated to write and validate. This is typically only a win for relatively simple algorithms, and comes at a heavy cost of programmer time.
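To give an idea of what these tricks look like, here is a minimal sketch of a length-bounded string length that checks eight bytes per step. The zero-byte test is the well known subtract-and-mask trick; the function names are mine. Note how much of the code deals with the tail and with finding the exact position after the fast loop stops.

```c
#include <stddef.h>
#include <stdint.h>
#include <string.h>

/* Nonzero if any of the eight bytes in v is zero. */
static int has_zero_byte(uint64_t v)
{
    return ((v - 0x0101010101010101ull) & ~v & 0x8080808080808080ull) != 0;
}

/* Length of the string in buf, reading at most n bytes.
   Returns n if no terminator is found. */
size_t bounded_strlen(const char *buf, size_t n)
{
    size_t i = 0;

    /* Fast path: skip eight bytes at a time while none of them is zero. */
    while (i + 8 <= n) {
        uint64_t v;
        memcpy(&v, buf + i, 8);     /* load that is safe for unaligned buf */
        if (has_zero_byte(v))
            break;
        i += 8;
    }
    /* Slow path: find the exact terminator and handle the tail. */
    while (i < n && buf[i] != '\0')
        i++;
    return i;
}
```

The explicit bound n is what keeps this sketch from reading past the end of the buffer; a real strlen has no such bound and has to rely on alignment arguments instead.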

On modern x86 CPUs an alternative is to use the SSE 4.2 string instructions PCMP*STR*. They are essentially programmable comparison engines to compare two 128bit vectors at a time. They can handle string end detection and various other cases directly. They are very powerful, but since they are so configurable they are also hard to use (2^9 possible combinations). The hardware can do all these operations in parallel, so it’s more efficient than an explicit loop.

These instructions are much easier to use than the old bit twiddling tricks, but they are still hard.

They operate on two 128bit vector inputs and compare all the 8bit (or 16bit) pairs based on an 8bit configuration flag word. The inputs can be 0 terminated strings or have explicit lengths for both vectors. The output is either a vector mask or an index, plus some condition codes.

The instructions can be either used from assembler, or using C intrinsics.
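As a small illustration of the intrinsics route, the sketch below uses `_mm_cmpistri` in "equal any" mode to find the first byte of a 16 byte block that is in a character set. The function name `first_of_set16` is made up, and the `target("sse4.2")` attribute is a gcc/clang way to avoid needing -msse4.2 for the whole file. A scalar fallback is included only so the example builds on other targets.

```c
#if defined(__x86_64__) || defined(__i386__)
#include <nmmintrin.h>   /* SSE 4.2 intrinsics */

/* Index of the first byte in block that occurs in set, or 16 if none.
   Both arguments are 16 byte buffers holding 0 terminated strings
   (a full 16 byte string without terminator is also allowed). */
__attribute__((target("sse4.2")))
int first_of_set16(const char block[16], const char set[16])
{
    __m128i b = _mm_loadu_si128((const __m128i *)block);
    __m128i s = _mm_loadu_si128((const __m128i *)set);

    /* unsigned bytes, match any character of the set, lowest index */
    return _mm_cmpistri(s, b,
        _SIDD_UBYTE_OPS | _SIDD_CMP_EQUAL_ANY | _SIDD_LEAST_SIGNIFICANT);
}
#else
/* Scalar fallback with the same result for non-x86 targets. */
int first_of_set16(const char block[16], const char set[16])
{
    for (int i = 0; i < 16 && block[i]; i++)
        for (int j = 0; j < 16 && set[j]; j++)
            if (block[i] == set[j])
                return i;
    return 16;
}
#endif
```

For example, with a block holding "hello, world" and a set holding ",;" the result is 5. A full search would loop over the input in 16 byte steps and stop at the first result below 16 or at the string end.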

To make this all easier to use I wrote the PCMPSTR calculator, which lets you interactively configure and explore these instructions. It generates snippets that can be used in programs.

Feedback welcome and let me know if you find a new interesting use with this.

Some tips for tuning

- Always profile first to see if something is time critical. Don’t bother with code that does not show up in the profiler

- Understand what’s common and uncommon in typical input data

- Always have a reference version of the algorithm that does things in a straightforward manner and is optimized for clarity, not speed. First write the program using the reference code and profile before tuning. Have a debug build that always compares the output of reference and optimized.

- Make sure the reference version stays up-to-date when the program changes.
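The reference version tip could be sketched like this. Bit parity is just a stand-in for whatever you are tuning, and the function names are hypothetical. The entry point always runs the tuned code, and in a debug build (NDEBUG not defined) `assert` also runs the reference and compares.

```c
#include <assert.h>
#include <stdint.h>

/* Reference: obviously correct, optimized for clarity. */
static unsigned parity_ref(uint32_t x)
{
    unsigned p = 0;
    for (int i = 0; i < 32; i++)
        p ^= (x >> i) & 1u;
    return p;
}

/* Tuned: folds the word onto itself instead of looping over the bits. */
static unsigned parity_fast(uint32_t x)
{
    x ^= x >> 16;
    x ^= x >> 8;
    x ^= x >> 4;
    x ^= x >> 2;
    x ^= x >> 1;
    return x & 1u;
}

/* Entry point used by the program. Debug builds cross-check every
   call; with -DNDEBUG the check and the reference call disappear. */
unsigned parity32(uint32_t x)
{
    unsigned r = parity_fast(x);
    assert(r == parity_ref(x));
    return r;
}
```

Since the check sits in the one entry point, the reference gets exercised with the real input data of the program, not only with whatever a unit test happens to feed it.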

IO affinity in numactl

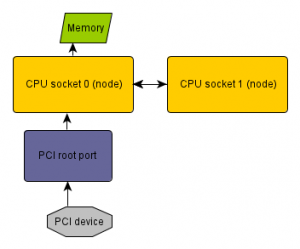

When doing IO tuning on Linux servers it’s common to bind memory to specific NUMA nodes to optimize the memory access of an IO device.

If you want to minimize the memory access latency, the memory used by the device has to be near the PCI root port that connects the device.

This optimizes for the IO device. Alternatively you could optimize for the CPU by putting the memory near the node where the code that accesses the data runs. But let’s talk about optimizing for the device here, which makes sense when you’re more IO bound than CPU bound.

On a system with distributed PCI Express ports (like recent Intel servers in the picture above) this requires knowing which node the PCI device is connected to. ACPI has a method to export this information from the BIOS to the OS (_PXM). Unfortunately not every BIOS implements this. But when it’s implemented, numactl 2.0.8 now can directly discover this information, so you don’t have to do it manually.

So for example to bind a program to the node of the device eth0 you can now write

numactl --membind netdev:eth0 --cpubind netdev:eth0 ...

Or to bind to the device that routes 10.0.0.1

numactl --membind ip:10.0.0.1 --cpubind ip:10.0.0.1 ...

Or to bind to the device that contains a specific disk file

numactl --membind file:/path/file --cpubind file:/path/file ...

Other methods are block: for block devices and pci: for specifying PCI devices

The resolution will currently only work when there is only a single level in the IO stack. So when the block device is a software RAID or an LVM volume, or the network device is a VPN, numactl doesn’t know how to resolve the underlying devices (and they may not be unique anyway if there are multiple underlying devices). In this case it will work to specify the underlying device directly.

The resolution also only works when the BIOS exports this information. When this is not the case you either have a buggy BIOS or a system which has no distributed PCI express ports.

Note the numactl download server currently seems to be down. I hope they fix that soon. But the ftp site for numactl still works.

Update: add working download and fix numactl version.

epoll and garbage collection

epoll, xor lists, pointer compression. What do they have in common? None of them plays well with automatic garbage collection.

I recently wrote my first program using epoll. And I realized that epoll does not play well with garbage collection. It is one of the few (only?) system calls where the kernel saves a pointer for user space. It supports stuffing other data in there too, like the fd, but for anything non trivial you typically need some state per connection, thus a pointer.

A garbage collector relies on identifying all pointers to an object, so that it can decide whether the object is still used or not.

Now normally user programs should have some other reference to the object to implement time outs and similar. But if they don’t, and the only reference is stored in the kernel, and the garbage collector comes in at the wrong time, the connection object will be garbage collected even though the connection is still active. This is because the garbage collector cannot see into the kernel.
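The following Linux-only sketch shows the pattern in question. The helper `epoll_roundtrip` is made up for illustration and uses a pipe instead of a real connection. The per-connection state pointer is handed to the kernel in `epoll_event.data.ptr`, and the only way the program gets it back is from `epoll_wait`. In C that is harmless, but a collector that scans only user space memory would never see this copy.

```c
#include <stddef.h>
#include <sys/epoll.h>
#include <unistd.h>

/* Register the read end of a pipe with epoll, storing only `state` as
   user data, make it readable and return what epoll_wait hands back.
   Returns NULL on any error. */
void *epoll_roundtrip(void *state)
{
    int pfd[2];
    void *result = NULL;
    int ep = epoll_create1(0);

    if (ep < 0)
        return NULL;
    if (pipe(pfd) < 0) {
        close(ep);
        return NULL;
    }

    struct epoll_event ev = { .events = EPOLLIN };
    ev.data.ptr = state;    /* the kernel now holds a copy of the pointer */

    if (epoll_ctl(ep, EPOLL_CTL_ADD, pfd[0], &ev) == 0 &&
        write(pfd[1], "x", 1) == 1) {
        struct epoll_event out;
        if (epoll_wait(ep, &out, 1, 1000) == 1)
            result = out.data.ptr;  /* pointer comes back unchanged */
    }
    close(pfd[0]);
    close(pfd[1]);
    close(ep);
    return result;
}
```

If the program drops every other reference to the state object between `epoll_ctl` and `epoll_wait`, the copy inside the kernel is the only one left.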

Something to watch out for.

The weekend error anomaly

I run mcelog.org, which describes the standard way in Linux to handle machine check errors. Most of the hits on the website are just people typing an error log into a search engine; mcelog.org ranks quite high on Linux machine check related terms.

The log files give me some indication of how many errors are occurring on Linux systems in the field. Most of the errors are corrected memory errors on systems with ECC memory: a bit flipped, but the ECC code corrected it and no actual data corruption occurred. (In this sense they are not actually errors; a more correct term would be “events”.) Other errors like network errors or disk errors are not logged by mcelog.

I noticed that there seem to be fewer memory errors on weekends. Normally the distribution of hits is fairly even over the week. But on Saturday and Sunday it drops to half.

It’s interesting to speculate why this weekend anomaly happens.

ECC memory is normally only on server systems, which should be running 24h. In principle errors should be evenly distributed over the whole week.

Typing the error into google is no automated procedure. A human has to read the log files and do it manually.

If people are more likely to do this on work days one would expect that they would catch up on the errors from the weekend on Monday. So Monday should have more hits. But that’s not in the data: Monday is not different from other weekdays.

It’s also sticky for each system (or rather each human googling). Presumably the person will google the error only once, no matter how many errors their system has, and after that “cache” the knowledge of what the error means. So the mcelog.org hits are more an indication of “first memory error in the career of a system administrator” (assuming the admin has perfect memory, which may be a bold assumption). But given a large enough supply of new sysadmins this should still be a reasonable indication of the true number of errors (at least on the systems likely to be handled by rookie administrators).

The hour distribution is more even, with 9-10 slightly higher. Not sure which time zone and what that means on the geographical distribution of errors and rookie admins.

One way to explain the weekend anomaly could be that servers may be more busy on weekdays and that they may have more errors when they are busy. Are these two assumptions true? I don’t know. It would be interesting to know if this shows up in other people’s large scale error collections too.

I wonder if it’s possible to detect solar flares in these logs. Need to find a good data source for them. Are there any other events generating lots of radiation that may affect servers? I hope there will never be a nuke or a super nova blast in the data.

Longterm 2.6.35.13 kernel released

A new longterm 2.6.35.13 Linux kernel is released. This version contains security fixes and everyone using 2.6.35 is encouraged to update.

tarball, patch, incremental patch against 2.6.35.12, ChangeLog

A Linux longterm 2.6.35.12 kernel has been released.

This release contains security fixes and everyone is encouraged to update. Thanks to all contributors.

Full tarball linux-2.6.35.12.tar.gz

SHA1: 71bd9d5af3493c80d78303901d4c28b3710e2f40

Patch against 2.6.35: patch-2.6.35.12.gz

SHA1: 30305ebca67509470a6cc2a80767769efdd2073e

Patch against 2.6.35.11 patch-2.6.35.11-12.gz

SHA1: e2c30774474f0a3f109a8cb6ca2a95f647c196b8

Git tree: git://git.kernel.org/pub/scm/linux/kernel/git/longterm/linux-2.6.35.y.git

The Linux longterm kernel 2.6.35.11 has been released

The 2.6.35.11 longterm kernel is out.

Patches and tarball at kernel.org or from the git tree. Full ChangeLog.

Thanks to all contributors.

More ergonomics – desktop lighting: redshift

A cool new desktop ergonomics tool I started using recently is redshift. redshift is a clone of the f.lux package, which also has Mac and Windows versions. (There’s also another fork of redshift called redshift-gui, but I’m using the plain redshift, which works fine for me.)

Side note: some corporate firewalls seem to block the f.lux page because it contains flux minus dots (like the botnet?)

The basic idea is to adjust the light temperature of your screen to your inner clock and vice versa. Your inner clock, running in a circadian rhythm, regulates your sleeping schedule, body temperature and lots of other things. The human clock (or rather the clock of nearly all animals) does not have an exact 24 hour period, but runs slightly slower. To keep your rhythm aligned to the day it regularly needs to be re-adjusted by a zeitgeber. This is normally done using day light input to your eyes, using brightness and light temperature (red for evening etc.).

Now the problem with people like me (and likely you) who stare into computer screens for a long time is that the lighting does not change there, unlike natural light. The light temperature stays cold like at noon, even in the middle of the night. This doesn’t give your inner clock any cues.

You configure f.lux/redshift with your position and they compute the current position of the sun and adjust the light temperature of the screen based on that. This generally means that the screen gets more and more red towards the evening. At night it stays red. (I guess that’s not fully natural because real night is dark, but then a dark screen wouldn’t be very practical. Perhaps we had a few hundred thousand years to adjust to staring into red fire at night though, but I don’t want to get into evolutionary psychology style just-so stories here.)

This should make you more sleepy towards the evening and keep your sleep rhythm nearer normal day/night.

Does it actually work? I’m not sure, but I like it at least (but perhaps that’s just because I don’t mind my screen having a red tint; you can tell what times of the day I prefer for work). Informally at least my sleep rhythm didn’t get worse from using it, and may be slightly better. I also like the visual indication of the time.

I guess I should do a comparison study and keep some data on the sleep rhythm while using redshift and not using it, but so far I haven’t had the energy to really set up such an experiment.

The f.lux page has some more theory and references.

One problem right now is that the X server gamma adjustment does not cover the mouse, so the mouse pointer in cold colors looks a bit out of place towards the evening. I wonder how hard it would be to fix that?

I also sometimes have to turn it off when viewing pictures or movies, but only rarely.

And when you travel you have to remember to adjust the coordinates.

Interesting side question: how to set up the tool when you use the laptop during an intercontinental flight? Could it help with jetlag when used the right way? But how?