IO affinity in numactl

When doing IO tuning on Linux servers it’s common to bind memory to specific NUMA nodes to optimize the memory access of an IO device.

If you want to minimize memory access latency, the memory used by the device has to be near the PCI root port that connects the device.

This optimizes for the IO device. Alternatively, you could optimize for the CPU by putting the memory near the node where the code that accesses the data runs. But let’s talk about optimizing for the device here, which makes sense when you’re more IO bound than CPU bound.

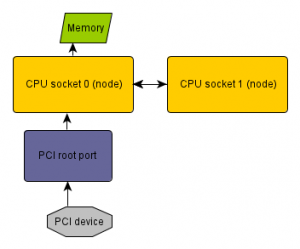

On a system with distributed PCI Express ports (like the recent Intel servers in the picture above) this requires knowing which node the PCI device is connected to. ACPI has a method (_PXM) to export this information from the BIOS to the OS. Unfortunately not every BIOS implements it. But when it is implemented, numactl 2.0.8 can now discover this information directly, so you don’t have to do it manually.
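For comparison, here is a rough sketch of what the manual lookup can look like for a network device. It reads the `numa_node` attribute that the kernel exposes in sysfs for PCI devices. The function name `netdev_numa_node` and the `SYSFS_ROOT` override are made up for this illustration (the override only exists so the function can be tried against a fake directory tree); this is not how numactl itself is implemented.

```shell
#!/bin/sh
# Print the NUMA node the kernel reports for a network device.
# SYSFS_ROOT is a hypothetical override for experimenting; it defaults to /sys.
netdev_numa_node() {
    dev=$1
    node_file="${SYSFS_ROOT:-/sys}/class/net/$dev/device/numa_node"
    if [ -r "$node_file" ]; then
        # Prints the node number, or -1 when the kernel has no node for the device.
        cat "$node_file"
    else
        # Purely virtual devices (loopback, tunnels) have no device/ link in sysfs.
        echo "no NUMA information for $dev" >&2
        return 1
    fi
}

# Example (not run here): netdev_numa_node eth0
```

The number it prints is the node you would otherwise have to pass to numactl by hand.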

So, for example, to bind a program to the node of the device eth0 you can now write:

numactl --membind netdev:eth0 --cpubind netdev:eth0 ...

Or to bind to the device that routes 10.0.0.1:

numactl --membind ip:10.0.0.1 --cpubind ip:10.0.0.1 ...

Or to bind to the device that contains a specific disk file:

numactl --membind file:/path/file --cpubind file:/path/file ...

Other methods are block: for block devices and pci: for specifying PCI devices.

Currently the resolution only works when there is a single level in the IO stack. When the block device is a software RAID or an LVM volume, or the network device is a VPN, numactl doesn’t know how to resolve the underlying devices (and the result may not be unique anyway if there are multiple underlying devices). In this case, specify the underlying device directly.
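If you don’t remember which devices sit underneath a stacked block device, the kernel lists them in the `slaves` directory in sysfs. The sketch below simply prints those names. The function name `underlying_block_devices` and the `SYSFS_ROOT` override are invented for this example, and it only looks one level down.

```shell
#!/bin/sh
# List the component devices the kernel reports for a stacked block
# device (software RAID, device mapper / LVM), one name per line.
# SYSFS_ROOT is a hypothetical override for experimenting; it defaults to /sys.
underlying_block_devices() {
    slaves_dir="${SYSFS_ROOT:-/sys}/block/$1/slaves"
    [ -d "$slaves_dir" ] || return 1
    for entry in "$slaves_dir"/*; do
        # An empty directory leaves the glob unexpanded; skip that case.
        [ -e "$entry" ] || [ -L "$entry" ] || continue
        basename "$entry"
    done
}

# Example (not run here): underlying_block_devices md0
```

If it prints more than one device, and they hang off different nodes, there is no single right answer and you have to pick one yourself.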

The resolution also only works when the BIOS exports this information. When it doesn’t, you either have a buggy BIOS or a system without distributed PCI Express ports.
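One way to see whether the information is there is the `numa_node` attribute in sysfs, which reads -1 when the kernel has no node for the device. A script could use that to fall back to running unbound. This is only a sketch: the helper name `numactl_bind_args` is made up, and it uses numeric node numbers with `--membind` and `--cpunodebind` rather than the device syntax shown above.

```shell
#!/bin/sh
# Print numactl binding options for a node number.
# Fails (and prints nothing) when the node is unknown: -1, empty, or not a number.
numactl_bind_args() {
    case $1 in
        ''|*[!0-9]*) return 1 ;;                      # no usable node number
        *) echo "--membind=$1 --cpunodebind=$1" ;;    # bind memory and CPUs to that node
    esac
}

# Example (not run here; ./server is a placeholder program):
#   node=$(cat /sys/class/net/eth0/device/numa_node)
#   if args=$(numactl_bind_args "$node"); then
#       numactl $args ./server
#   else
#       ./server    # BIOS exported nothing, run without binding
#   fi
```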

Note the numactl download server currently seems to be down. I hope they fix that soon. But the ftp site for numactl still works.

Update: added a working download link and fixed the numactl version.