Archive for the ‘kernel’ Category

A calculator for PCMPSTR

String processing is quite common in software. It ranges from simple operations like searching for characters in a string, through more complex subset and substring searches, to complex transformations. Most string processing code processes strings byte by byte (or 16-bit word by word for 16-bit Unicode). That is fine for code that is not time critical.

A modern CPU core can process 32 to 256 bits at a time. If it handles only 8 bits in each operation, large parts of its computing capacity go unused. If the string code processes a lot of data and slows down the program, it’s better to find some way to use this unused capacity. I’m only talking about parallelism inside a single core here, not thread-level parallelism across multiple cores.

For simple standard functions like strlen there are algorithms available that process the string in 32-bit or 64-bit units using various bit manipulation tricks (Hacker’s Delight has all the details). Typically C libraries already use such optimized algorithms (although the compiler may not always use the standard library if you just call strlen). But they are usually too difficult to use for custom string processing.

They also usually need special cases to handle the end of the string (the length of a string is often not known in advance) and are complicated to write and validate. Typically they are only a win for relatively simple algorithms, and they come at a heavy cost in programmer time.
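As an illustration of the kind of bit trick meant here, the following is a minimal sketch of a strlen that checks 8 bytes per step. The names `has_zero_byte` and `strlen_wordwise` are made up for this example, and it is not how any particular C library does it. Note the two special cases (alignment at the start, the terminator at the end), and that the word load may read a few bytes past the terminator, which strict C does not permit; real implementations do this in assembler.

```c
#include <stddef.h>
#include <stdint.h>
#include <string.h>

/* Nonzero if any of the 8 bytes in w is zero (classic bit trick). */
static int has_zero_byte(uint64_t w)
{
    return ((w - 0x0101010101010101ULL) & ~w & 0x8080808080808080ULL) != 0;
}

/* Hypothetical word-at-a-time strlen; assumes the bytes up to the next
   8-byte boundary after the terminator are readable. */
size_t strlen_wordwise(const char *s)
{
    const char *p = s;

    /* Special case 1: go byte by byte until p is 8-byte aligned, so an
       aligned 8-byte load never crosses a page boundary. */
    while ((uintptr_t)p % sizeof(uint64_t) != 0) {
        if (*p == '\0')
            return (size_t)(p - s);
        p++;
    }

    /* Main loop: skip whole words that contain no zero byte. */
    for (;;) {
        uint64_t w;
        memcpy(&w, p, sizeof w);
        if (has_zero_byte(w))
            break;
        p += sizeof w;
    }

    /* Special case 2: find the terminator inside the final word. */
    while (*p != '\0')
        p++;
    return (size_t)(p - s);
}
```

Even for something as simple as strlen, most of the code is special-case handling, which is the point made above.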

On modern x86 CPUs an alternative is to use the SSE 4.2 string instructions PCMP*STR*. They are essentially programmable comparison engines that compare two 128-bit vectors at a time. They can handle string end detection and various other cases directly. They are very powerful, but since they are so configurable they are also hard to use (2^9 possible combinations). The hardware can do all these operations in parallel, so it’s more efficient than an explicit loop.

These instructions are much easier to use than the old bit twiddling tricks, but they are still hard.

They operate on two 128-bit vector inputs and compare all the 8-bit (or 16-bit) pairs based on an 8-bit configuration flag word. The inputs can be 0-terminated strings, or explicit lengths can be given for both vectors. The output is either a vector mask or an index, plus some condition codes.

The instructions can be either used from assembler, or using C intrinsics.
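To make the semantics concrete, here is a scalar model of just one of the many configurations: the "equal any" comparison on 8-bit elements with 0-terminated inputs and index output (what the `_mm_cmpistri` intrinsic computes with `_SIDD_UBYTE_OPS | _SIDD_CMP_EQUAL_ANY | _SIDD_LEAST_SIGNIFICANT`). The function names are invented for this sketch; the hardware does all comparisons at once instead of looping.

```c
#include <stddef.h>

#define VEC_BYTES 16  /* one 128-bit vector of 8-bit elements */

/* Implicit length of an operand: bytes before the first 0, at most 16. */
static size_t implicit_len(const unsigned char v[VEC_BYTES])
{
    size_t n = 0;
    while (n < VEC_BYTES && v[n] != 0)
        n++;
    return n;
}

/*
 * Scalar model (illustration only) of "equal any" with index output:
 * return the position of the first valid byte in `text` that equals any
 * valid byte in `set`, or 16 if there is no such byte.
 */
int model_equal_any_index(const unsigned char set[VEC_BYTES],
                          const unsigned char text[VEC_BYTES])
{
    size_t set_len = implicit_len(set);
    size_t text_len = implicit_len(text);

    for (size_t j = 0; j < text_len; j++)
        for (size_t i = 0; i < set_len; i++)
            if (text[j] == set[i])
                return (int)j;
    return VEC_BYTES;
}
```

For example, with the set " ,;" and the text "key=value; x" the model returns 9, the position of the semicolon. A model like this can also serve as the reference version when validating code that uses the real instruction.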

To make this all easier to use I wrote the PCMPSTR calculator, which lets you interactively configure and explore these instructions. It generates snippets that can be used in programs.

Feedback welcome and let me know if you find a new interesting use with this.

Some tips for tuning

- Always profile first to see if something is time critical. Don’t bother with code that does not show up in the profiler.

- Understand what’s common and uncommon in typical input data.

- Always have a reference version of the algorithm that does things in a straightforward manner and is optimized for clarity, not speed. First write the program using the reference code and profile before tuning. Have a debug build that always compares the output of the reference and optimized versions.

- Make sure the reference version stays up-to-date when the program changes.
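The reference-version tip can be sketched as follows. All function names are hypothetical, and the "fast" version is only a stand-in that leans on memchr (which is usually vectorized in the C library); the point is the wrapper, which cross-checks both versions unless the program is built with NDEBUG.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

/* Reference version: obviously correct, optimized for clarity. */
size_t count_char_ref(const char *s, size_t len, char c)
{
    size_t n = 0;
    for (size_t i = 0; i < len; i++)
        if (s[i] == c)
            n++;
    return n;
}

/* Stand-in for the tuned version: lets memchr skip ahead to each hit. */
size_t count_char_fast(const char *s, size_t len, char c)
{
    const char *end = s + len;
    size_t n = 0;

    while (s < end) {
        const char *hit = memchr(s, c, (size_t)(end - s));
        if (hit == NULL)
            break;
        n++;
        s = hit + 1;
    }
    return n;
}

/* What the rest of the program calls. In a debug build every result is
   compared against the reference; with -DNDEBUG the check disappears. */
size_t count_char(const char *s, size_t len, char c)
{
    size_t n = count_char_fast(s, len, c);
    assert(n == count_char_ref(s, len, c));
    return n;
}
```

Because the check lives in one wrapper, the reference code keeps getting compiled and exercised, which helps it stay up to date.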

IO affinity in numactl

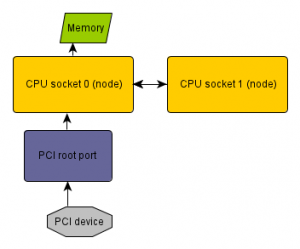

When doing IO tuning on Linux servers it’s common to bind memory to specific NUMA nodes to optimize the memory access of an IO device.

You may want to minimize the memory access latency, then the memory used by the device has to be near the PCI root port that connects the device.

This optimizes for the IO device. Alternatively you could optimize for the CPU and put the memory near the node where the code that accesses the data runs. But let’s talk about optimizing for the device here, as you would when you’re more IO bound than CPU bound.

On a system with distributed PCI Express ports (like recent Intel servers in the picture above) this requires knowing which node the PCI device is connected to. ACPI has a method to export this information from the BIOS to the OS (_PXM). Unfortunately not all BIOSes implement this. But when it’s implemented, numactl 2.0.8 can now discover this information directly, so you don’t have to do it manually.

So for example to bind a program to the node of the device eth0 you can now write

numactl --membind netdev:eth0 --cpubind netdev:eth0 ...

Or to bind to the device that routes 10.0.0.1

numactl --membind ip:10.0.0.1 --cpubind ip:10.0.0.1 ...

Or to bind to the device that contains a specific disk file

numactl --membind file:/path/file --cpubind file:/path/file ...

Other methods are block: for block devices and pci: for specifying PCI devices.

The resolution currently only works when there is only a single level in the IO stack. So when the block device is a software RAID or an LVM volume, or the network device is a VPN, numactl doesn’t know how to resolve the underlying devices (and the result may not be unique anyway if there are multiple underlying devices). In this case it will work to specify the underlying device directly.

The resolution also only works when the BIOS exports this information. When this is not the case you either have a buggy BIOS or a system which has no distributed PCI Express ports.
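One way to check what the kernel knows about a device is to read its numa_node attribute in sysfs, which holds -1 when no node information is available. The sketch below does this for a network interface; the helper names are invented for the example, and it is not meant to describe how numactl itself does the lookup.

```c
#include <stddef.h>
#include <stdio.h>

#define NODE_UNKNOWN (-1)  /* same value the kernel reports for "no node" */

/* Read a single integer from a sysfs numa_node file. */
int read_numa_node(const char *path)
{
    FILE *f = fopen(path, "r");
    int node = NODE_UNKNOWN;

    if (f == NULL)
        return NODE_UNKNOWN;
    if (fscanf(f, "%d", &node) != 1)
        node = NODE_UNKNOWN;
    fclose(f);
    return node;
}

/* Hypothetical helper: NUMA node of the device behind a network
   interface, or NODE_UNKNOWN if it cannot be determined. */
int netdev_numa_node(const char *ifname)
{
    char path[256];
    int n = snprintf(path, sizeof path,
                     "/sys/class/net/%s/device/numa_node", ifname);

    if (n < 0 || (size_t)n >= sizeof path)
        return NODE_UNKNOWN;
    return read_numa_node(path);
}
```

If `netdev_numa_node("eth0")` returns -1 on a multi-socket machine, the netdev: resolution is unlikely to be useful for that device.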

Note the numactl download server currently seems to be down. I hope they fix that soon. But the ftp site for numactl still works.

Update: add working download and fix numactl version.

epoll and garbage collection

epoll, xor lists, pointer compression. What do they have in common? None of them plays well with automatic garbage collection.

I recently wrote my first program using epoll. And I realized that epoll does not play well with garbage collection. It is one of the few (only?) system calls where the kernel saves a pointer for user space. It supports stuffing other data in there too, like the fd, but for anything non-trivial you typically need some state per connection, and thus a pointer.

A garbage collector relies on identifying all pointers to an object, so that it can decide whether the object is still used or not.

Now normally user programs should have some other reference, to implement timeouts and similar. But if they don’t, and the only reference is stored in the kernel, and the garbage collector comes in at the wrong time, the connection object will be garbage collected even though the connection is still active. This is because the garbage collector cannot see into the kernel.
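The pointer in question is the `data.ptr` member of `struct epoll_event`. A minimal Linux-only sketch (the `struct conn` type and both helper functions are invented for the example) shows how it travels into the kernel and back:

```c
#include <stddef.h>
#include <stdlib.h>
#include <sys/epoll.h>
#include <unistd.h>

/* Hypothetical per-connection state. */
struct conn {
    int fd;
    long bytes_seen;
};

/* Register c->fd with epoll. The kernel keeps a copy of the pointer c
   in its own memory, where no user-space garbage collector can see it. */
int watch_conn(int epfd, struct conn *c)
{
    struct epoll_event ev = { .events = EPOLLIN };

    ev.data.ptr = c;
    return epoll_ctl(epfd, EPOLL_CTL_ADD, c->fd, &ev);
}

/* Wait for one event and return the pointer the kernel hands back,
   or NULL on timeout or error. */
struct conn *next_ready_conn(int epfd, int timeout_ms)
{
    struct epoll_event ev;

    if (epoll_wait(epfd, &ev, 1, timeout_ms) != 1)
        return NULL;
    return ev.data.ptr;
}
```

Between `watch_conn` and `next_ready_conn` the program needs no reference of its own to the `struct conn`. In C that is merely a leak risk; under a collector that frees unreachable objects it is the problem described above.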

Something to watch out for.

The weekend error anomaly

I run mcelog.org, which describes the standard way to handle machine check errors in Linux. Most of the hits on the website are just people typing an error log into a search engine; mcelog.org ranks quite high on Linux machine check related terms.

The log files give me some indication of how many errors are occurring on Linux systems in the field. Most of the errors are corrected memory errors on systems with ECC memory: a bit flipped, but the ECC code corrected it and no actual data corruption occurred. (In this sense they are not actually errors; a more correct term would be “events”.) Other errors like network errors or disk errors are not logged by mcelog.

I noticed that there seem to be fewer memory errors on weekends. Normally the distribution of hits is fairly even over the week. But on Saturday and Sunday it drops by half.

It’s interesting to speculate why this weekend anomaly happens.

ECC memory is normally only on server systems, which should be running 24h. In principle errors should be evenly distributed over the whole week.

Typing the error into Google is not an automated procedure. A human has to read the log files and do it manually.

If people are more likely to do this on work days one would expect that they would catch up on the errors from the weekend on Monday. So Monday should have more hits. But that’s not in the data: Monday is not different from other weekdays.

It’s also sticky for each system (or rather each human googling). Presumably a person will google the error only once, no matter how many errors their systems have, and after that “cache” the knowledge of what the error means. So the mcelog.org hits are more an indication of “first memory error in the career of a system administrator” (assuming the admin has perfect memory, which may be a bold assumption). But given a large enough supply of new sysadmins this should still be a reasonable indication of the true number of errors (at least on the systems likely to be handled by rookie administrators).

The hour distribution is more even, with 9-10 slightly higher. I’m not sure which time zone that corresponds to, or what it means for the geographical distribution of errors and rookie admins.

One way to explain the weekend anomaly could be that servers are busier on weekdays and have more errors when they are busy. Are these two assumptions true? I don’t know. It would be interesting to know if this shows up in other people’s large scale error collections too.

I wonder if it’s possible to detect solar flares in these logs. I need to find a good data source for them. Are there any other events generating lots of radiation that may affect servers? I hope there will never be a nuke or a supernova blast in the data.

Longterm 2.6.35.13 kernel released

A new longterm 2.6.35.13 Linux kernel has been released. This version contains security fixes and everyone using 2.6.35 is encouraged to update.

tarball, patch, incremental patch against 2.6.35.12, ChangeLog

A Linux longterm 2.6.35.12 kernel has been released.

This release contains security fixes and everyone is encouraged to update. Thanks to all contributors.

Full tarball linux-2.6.35.12.tar.gz

SHA1: 71bd9d5af3493c80d78303901d4c28b3710e2f40

Patch against 2.6.35: patch-2.6.35.12.gz

SHA1: 30305ebca67509470a6cc2a80767769efdd2073e

Patch against 2.6.35.11: patch-2.6.35.11-12.gz

SHA1: e2c30774474f0a3f109a8cb6ca2a95f647c196b8

Git tree: git://git.kernel.org/pub/scm/linux/kernel/git/longterm/linux-2.6.35.y.git

The Linux longterm kernel 2.6.35.11 has been released

The 2.6.35.11 longterm kernel is out.

Patches and tarball at kernel.org or from the git tree. Full ChangeLog.

Thanks to all contributors.

Big kernel lock semantics

My previous post on BKL removal received some comments about confusing and problematic semantics of the big kernel lock. Does the BKL really have weird semantics?

Again some historical background: the original Unix and Linux kernels were written for single processor systems. They employed a very simple model to avoid races when accessing data structures in the kernel. Each process running in kernel code owns the CPU until it yields explicitly (I ignore interrupts here, which complicate the model again, but are not essential to the discussion). That’s a classical cooperative multi-tasking or coroutine model. It worked very well; after all, both Unix and Linux were very successful.

Coroutines are an established programming model: in many scripting languages they are used to implement iterators or generators. They are also used for lots of other purposes, with many libraries being available to implement them for C.

Later, when Linux (and Unix) were ported to multi-processor systems, this model was changed and replaced with explicit locks. In order to convert the whole kernel step by step, the big kernel lock was introduced, which emulates the old cooperative multi-tasking model for code running under it. Only a single process can hold the big kernel lock at a time, but it is automatically dropped when the process sleeps and reacquired when it wakes up again.

So essentially BKL semantics are not “weird”: they are just classic Unix/Linux semantics.

Good literature on the topic is the older book Unix Systems for Modern Architectures by Curt Schimmel, which provides a survey of the various locking schemes used to convert a single processor kernel into an SMP system.

When a subsystem is converted away from the BKL, the BKL is often replaced by a mutex used as a code lock: a single mutex protects the complete subsystem. This locking model is not fully equivalent to the BKL: the BKL is dropped when code sleeps, while the mutex continues serializing even over the sleep region. Often this doesn’t matter: a lot of common kernel utility functions may sleep, but in practice do so only rarely, in exceptional cases. But sometimes sleeping is actually common (for example when doing disk IO), and in this case the straightforward mutex conversion can seriously limit parallelism. The old BKL code was able to run the sleeping regions in parallel. The mutex version is not. For example I believe this was a performance regression in the early versions of Frederic Weisbecker’s heroic reiserfs BKL conversion.

With all this I don’t want to say that removing the BKL is bad: I actually did some work of my own on this and it’s generally a good thing. But it does not necessarily give you immediate advantages and may even result in slower code at first.

Removing the big kernel lock. A big deal?

There’s a lot of hubbub recently about removing the big kernel lock completely in Linux 2.6.37. That is, it’s now possible to compile a configuration without any BKL use. There is still some code depending on the BKL, but it can be compiled out. But is that a big deal?

First some background: when Linux 2.0 was originally ported to SMP, the complete kernel ran under a single lock, to preserve the same semantics for kernel code as on uni-processor kernels. This was known as the big kernel lock.

Then over time more and more code was moved out of the lock (see chapter 6 in LK09 Scalability paper for more details).

In Linux 2.6 kernels very few subsystems actually still rely on the BKL. And most code that uses it is not time critical.

The biggest (moderately critical) users were a few file systems like reiserfs and NFS. The kernel lockf()/F_SETFL file locking subsystem was also still using the BKL. And the biggest user (in terms of amount of code) were the ioctl callbacks in drivers. While there are a lot of them (most drivers have ioctls) the number of cycles spent in them tends to be rather minimal. Usually ioctls are just used for initialization and other comparatively rare jobs.

In most cases these do not really suffer from global locking.

This is not to say that the BKL does not matter at all. If you’re a heavy parallel user of a subsystem or a function that still needs it then it may have been a bottleneck. In some situations (like hard rt kernels) where locking is more expensive it may also hurt more. But I suspect that for most workloads this has not been the case for a long time, because the BKL is already rare and was eliminated from most hot paths a long time ago.

There’s an old rule of thumb that 90% of all program run time is spent in 10% of the code (I suspect the numbers are actually even more extreme for typical kernel loads), and that you should only tune the 10% that matter and leave the rest of the code alone. The BKL is likely already not in your 10% (unless you’re unlucky). The goal of kernel scalability is to make those 10% run in parallel on multiple cores.

Now what happens when the BKL is removed from a subsystem? Normally the subsystem gains a code lock as the first stage: that is a private lock that serializes the code paths of the subsystem. Now using code locks is usually considered bad practice (“lock data, not code”). Next come object locks (“lock data”) and then sometimes read-copy-update and other fancy lock-less tricks. A lot of code gets stuck in the first or second stages of code or coarse-grained data locking.

Consider a workload that exercises a particular subsystem in a highly parallel manner. The subsystem accounts for most of the 90% of kernel runtime. The subsystem was using the BKL and gets converted to a subsystem code lock.

Previously the parallelism was limited by the BKL. Now it’s limited by the subsystem code lock. If you don’t have other hot subsystems that also use the BKL you gain absolutely nothing: the serialization of your 90% runtime is still there, just shifted to another lock. You would only gain if there’s another heavy BKL user too in your workload, which is unlikely.

Essentially with code locks you don’t have a single big kernel lock anymore, but instead lots of little BKLs.

As a next step the subsystem may be converted to more fine-grained data locking. For example, if the subsystem has a hash table to look up objects and do something with them (that’s a common kernel code pattern) you may have locks per hash bucket and another lock for each object. Objects can be lots of things: devices, mount points, inodes, directories, etc.
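The hash table pattern can be sketched in user space with pthread mutexes standing in for kernel locks. All names here are made up for illustration, and object removal (and with it object lifetime) is deliberately left out:

```c
#include <pthread.h>
#include <stddef.h>

#define NBUCKETS 64

struct object {
    unsigned key;
    long counter;            /* protected by the object lock */
    pthread_mutex_t lock;    /* per-object lock */
    struct object *next;     /* hash chain, protected by the bucket lock */
};

struct bucket {
    pthread_mutex_t lock;    /* per-bucket lock */
    struct object *head;
};

static struct bucket table[NBUCKETS];

void table_init(void)
{
    for (size_t i = 0; i < NBUCKETS; i++) {
        pthread_mutex_init(&table[i].lock, NULL);
        table[i].head = NULL;
    }
}

void table_insert(struct object *obj)
{
    struct bucket *b = &table[obj->key % NBUCKETS];

    pthread_mutex_init(&obj->lock, NULL);
    pthread_mutex_lock(&b->lock);
    obj->next = b->head;
    b->head = obj;
    pthread_mutex_unlock(&b->lock);
}

/* Look up key and do "something" with the object (here: bump a counter).
   Returns 0 on success, -1 if the key is not in the table. */
int table_bump(unsigned key)
{
    struct bucket *b = &table[key % NBUCKETS];
    struct object *obj;

    pthread_mutex_lock(&b->lock);
    for (obj = b->head; obj != NULL; obj = obj->next)
        if (obj->key == key)
            break;
    if (obj != NULL)
        pthread_mutex_lock(&obj->lock);  /* taken before the bucket lock is dropped */
    pthread_mutex_unlock(&b->lock);

    if (obj == NULL)
        return -1;
    obj->counter++;                      /* only this object is serialized */
    pthread_mutex_unlock(&obj->lock);
    return 0;
}
```

Threads working on different keys mostly take different locks. Threads that all hit the same key still queue up on one bucket lock and one object lock.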

But here’s a common case: your workload only uses one object (for example all writes to the same SCSI disk) and that code is the 90% runtime code. Now again your workload will hit that object lock all the time and it will limit parallelism.

The lock holding time may be slightly smaller, but the fundamental scaling costs of locking are still there. Essentially you will have another small BKL for that workload that limits parallelism.

The good news is that in this case you can at least do something about it as a kernel user: you could change the workload to spread the accesses out over different objects, for example by using different directories or different devices. In some cases that’s practical, in others not. If it’s not, you still have the same problem. But it’s already a large step in the right direction.

Now a lot of the BKL conversions recently were simply code locks. About those I must admit I’m not really excited.

Essentially a code lock is not that much better than the BKL. What’s good is data locking and other techniques. There are a few projects that do this, like Nick Piggin’s work on VFS layer scalability, Jens Axboe’s work on block layer scalability, the work on speculative page faults and lots of others. I think those will have much more impact on real kernel scalability than the BKL removal.

So what’s left: The final BKL removal isn’t really a big step forward for Linux. It’s more a symbolic gesture, but I prefer to leave those to politicians and priests.

The 2.6.35.10 longterm Linux kernel has been released

2.6.35.10 is out. Since it contains security fixes all 2.6.35 users are encouraged to update. Get it at ftp://ftp.kernel.org/pub/linux/kernel/v2.6/longterm/v2.6.35/ or from git://git.kernel.org/pub/scm/linux/kernel/git/longterm/linux-2.6.35.y.git Full release notes.

Thanks to all contributors and patch reviewers.